AI. Finally, a Reason for My Homelab

For the past decade I’ve been running some form of home lab. It started as a playground for experimenting with side projects and has since become a place to learn by testing and breaking things. I’ve often spent more time fixing than building, but that’s part of the fun.

Fast forward 10 years, and there are many awesome tutorials and videos from people doing useful things with their homelabs. Jeff Geerling has awesome homelab guides with practical tips on running a home server for media content, and Christian Lempa has a great overview of running Home Assistant, various VMs and Kubernetes clusters, among other things.

At work we have an internal #homelab discussion channel. I’ve been sharing my setup there and with friends, and wanted to put it all into this blog post.

Why is a dedicated Local AI lab worth it?

- Lower Power AI

- Cost

- Local Processing

- Experimentation

- Unhinged AI

- Multimodal: Whisper / Flux.1 / Segment Anything Model 2

This AI server’s gone rogue, ditching its fancy data center for a gritty concrete shelf - talk about unhinged intelligence with an edge(y) new home!

The Hardware

For hardware, I repurposed a low-power AMD server. I was previously running a low-TDP CPU, but quickly started to hit cache limits, so I switched to an AMD Ryzen 5 5600X, which offers a good number of cores at a low cost per watt. I maxed out the RAM at 128GB and chose a more ‘business’ motherboard.

Since I wanted a 2U setup, I chose the NVIDIA RTX 4000 Ada in its SFF (small form factor) variant, which is both low profile and low power. It only has 20GB of VRAM and 6,144 CUDA cores. To oversimplify: the more VRAM a card (or group of cards) has, the larger the model, in parameters, it can run.

There are a few calculators out there for estimating the GPU VRAM usage of transformer-based models. Here is a rough chart for the open-source models current as of summer 2024.

Rough LLM Parameter Size to GPU Size Chart

| Card | VRAM | Llama 3.1 Model | Gemma 2 Model |
|---|---|---|---|
| RTX 2080 Super | 8 GB | 8B (FP8 or INT4) | Gemma 2 2B (with optimizations) |
| RTX 4080 | 16 GB | 8B (FP16) | Gemma 2 2B |
| RTX 4090 | 24 GB | 8B (FP16), 70B (INT4) | Gemma 2 9B |
| NVIDIA RTX 4000 Ada SFF | 20 GB | 8B (FP16), 70B (INT4) | Gemma 2 9B (with slight optimizations) |
| NVIDIA RTX 5000 Ada | 32 GB | 70B (INT4) | Gemma 2 9B |
| NVIDIA RTX 6000 Ada | 48 GB | 70B (FP8) | Gemma 2 27B (with optimizations) |
| NVIDIA H100 | 80 GB | 70B (FP16) | Gemma 2 27B |
| 8 x NVIDIA A100 | 640 GB | 405B (FP8) | Gemma 2 27B (full precision) and larger models |
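As a back-of-the-envelope check on a chart like this, a model’s weights need roughly parameter count × bytes per parameter, plus headroom for the KV cache and runtime. The sketch below assumes 2 bytes for FP16, 1 for FP8/INT8, 0.5 for INT4 and a flat 20% overhead. These are my assumptions, not measured figures, and real quantization formats (such as the Q4 variants on Ollama) use slightly more than 4 bits per weight. By this estimate, a 70B model at 4-bit needs about 35 GB for the weights alone. On a 20 GB or 24 GB card, it only runs with part of the model offloaded to system RAM, which Ollama handles automatically at a cost in speed.

```python
# Rough VRAM estimate: weights (params * bytes/param) plus a flat overhead
# allowance for the KV cache, activations and CUDA context.
# Assumptions: the bytes-per-parameter table and the 20% default overhead.
# Real usage depends on context length, batch size and the runtime.

BYTES_PER_PARAM = {
    "fp16": 2.0,
    "fp8": 1.0,
    "int8": 1.0,
    "int4": 0.5,
}


def estimate_vram_gb(params_billion: float, precision: str, overhead: float = 0.2) -> float:
    """Approximate VRAM needed in GB (1 GB = 1e9 bytes)."""
    # billions of params * bytes per param = GB of weights
    weights_gb = params_billion * BYTES_PER_PARAM[precision]
    return weights_gb * (1.0 + overhead)


def fits(params_billion: float, precision: str, vram_gb: float) -> bool:
    """True if the estimate fits entirely in VRAM, i.e. no CPU offload needed."""
    return estimate_vram_gb(params_billion, precision) <= vram_gb


if __name__ == "__main__":
    card_vram_gb = 20  # e.g. an RTX 4000 Ada SFF
    for params, precision in [(8, "fp16"), (8, "int4"), (27, "int4"), (70, "int4")]:
        need = estimate_vram_gb(params, precision)
        print(f"{params}B @ {precision}: ~{need:.1f} GB, fits in {card_vram_gb} GB: "
              f"{fits(params, precision, card_vram_gb)}")
```

For example, `fits(8, "fp16", 20)` is `True` (about 19.2 GB), while `fits(70, "int4", 20)` is `False` (about 42 GB).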

Among home-brew LLM builders, there are a few other options: grabbing a few A100s off eBay, running Mac Minis, or using exo to combine a range of computers into a mesh.

The Software

The current software stack is pretty basic: I’m running the machine as a traditional server without any extra virtualization.

OS & Graphics Drivers

I did this both for performance and to keep things simple. For example, CUDA and GPU support in Docker works, but can be patchy. In the same vein, I recommend a Linux OS with strong, well-tested graphics drivers. Ubuntu Server + NVIDIA Container Toolkit is perfect for server setups, and for desktop use I recommend Pop!_OS, as it takes the yak shaving out of dealing with NVIDIA drivers.
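Before layering anything on top, it’s worth confirming that the driver actually sees the card. Here is a minimal Python sketch that shells out to `nvidia-smi` with its CSV query flags and parses each GPU’s name and total memory (reported in MiB). The function names are my own, for illustration.

```python
import shutil
import subprocess

# nvidia-smi query: one "name, memory.total" line per GPU, memory in MiB.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse nvidia-smi CSV output into (name, total_MiB) tuples."""
    gpus = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, mem = line.rsplit(",", 1)  # memory is the last field
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Run nvidia-smi on this machine; raises if the driver tooling is missing."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found: is the NVIDIA driver installed?")
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpus(out)


if __name__ == "__main__":
    try:
        gpus = query_gpus()
    except (RuntimeError, subprocess.CalledProcessError) as err:
        print(f"GPU check failed: {err}")
    else:
        for name, mib in gpus:
            print(f"{name}: {mib / 1024:.1f} GiB")
```

If the NVIDIA Container Toolkit is set up correctly, `nvidia-smi` should also work inside a container started with Docker’s `--gpus all` flag.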

Docker

At the next layer of abstraction, I recommend using Docker and the NVIDIA Container Toolkit. A Docker workflow will keep you sane when testing other open-source projects and dealing with the mess of Python packages and Python versions adopted by the community. If you want to avoid Docker, pyenv or Anaconda (check its license terms for commercial usage) can help. This is my basic setup for Ollama, Open WebUI and Weaviate running in Docker.
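Once the containers are running, a quick way to confirm that each one is answering is to hit a lightweight HTTP endpoint on each. This sketch uses only Python’s standard library. The ports are assumptions: 11434 is Ollama’s default, 8080 is Weaviate’s usual HTTP port, and Open WebUI is assumed to be published on host port 3000. Adjust them to match your own setup.

```python
import urllib.request

# Assumed host port mappings; adjust to match your own Docker setup.
SERVICES = {
    "ollama": "http://{host}:11434/api/version",            # Ollama default port
    "open-webui": "http://{host}:3000/",                     # assumed host port
    "weaviate": "http://{host}:8080/v1/.well-known/ready",   # Weaviate readiness probe
}


def service_urls(host: str = "localhost") -> dict[str, str]:
    """Fill in the host for each service's health-check URL."""
    return {name: url.format(host=host) for name, url in SERVICES.items()}


def is_up(url: str, timeout: float = 2.0) -> bool:
    """True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError (non-2xx) and socket timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    for name, url in service_urls().items():
        print(f"{name:<10} {'up' if is_up(url) else 'DOWN'}  {url}")
```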

LLM Software

For managing large language models, I recommend Ollama. There are many other hacky open-source options, but Ollama is to LLM management what Docker is to LXC containers: it just works. It downloads the weights for you and, when a model doesn’t fit entirely in GPU VRAM, splits it between the GPU and the CPU and system RAM.
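Besides the CLI, Ollama exposes a REST API (on port 11434 by default), which web UIs like Open WebUI use to talk to it. Here is a minimal standard-library sketch of a non-streaming call to its `/api/generate` endpoint. It assumes the `llama3.1` model has already been pulled; the helper names are mine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 300) -> str:
    """Send the prompt and return the model's full response text."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("llama3.1", "Why is the sky blue? Answer in one sentence."))
    except OSError as err:  # URLError (e.g. connection refused) is an OSError subclass
        print(f"Could not reach Ollama: {err}")
```

With `"stream": False`, Ollama returns a single JSON object whose `response` field holds the full text. By default it streams newline-delimited JSON chunks instead.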

Connectivity

Since my 2U server, Duke, is banished to my crawl space, I needed a way to access it remotely. At home I use a simple SSH setup, and when I’m out, I use Teleport. I use both to reach the ollama CLI and one of the few UI wrappers; Open WebUI has been perfect for me.
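For the at-home SSH route, a local port forward makes Duke’s services appear on your laptop’s `localhost`. This sketch only builds the `ssh -N -L` command. It assumes `duke` is a host alias in `~/.ssh/config` and that Open WebUI is published on port 3000 on the server; both are illustrative assumptions. It doesn’t cover the Teleport setup.

```python
import shlex


def ssh_tunnel_cmd(host: str, forwards: dict[int, int], remote_host: str = "localhost") -> list[str]:
    """Build an ssh command forwarding each local port to remote_host:remote_port, as seen from `host`."""
    cmd = ["ssh", "-N"]  # -N: run no remote command, just hold the forwards open
    for local_port, remote_port in forwards.items():
        cmd += ["-L", f"{local_port}:{remote_host}:{remote_port}"]
    cmd.append(host)
    return cmd


if __name__ == "__main__":
    # "duke" is assumed to be a Host alias in ~/.ssh/config.
    # 11434 = Ollama API, 3000 = Open WebUI (assumed port).
    cmd = ssh_tunnel_cmd("duke", {11434: 11434, 3000: 3000})
    print(shlex.join(cmd))
    # To actually open the tunnel: subprocess.run(cmd)
```

While the tunnel is open, the CLI and scripts on the laptop can talk to `http://localhost:11434` as if Ollama were running locally.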

AI Demo

Here is a quick overview of how I access Duke, my homelab server.

Other Demos

Models & Model Performance

I switch between models for different purposes; here is a rundown of the ones I use most.

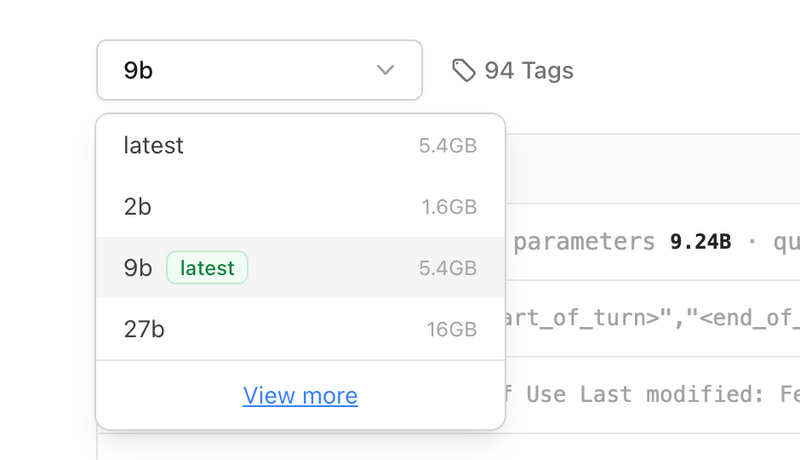

Tip: To try a model at different floating-point precisions or quantizations, click the ‘View More’ link on its Ollama page.
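When comparing models or precisions, Ollama reports its own timing stats. `ollama run <model> --verbose` prints them after each reply, and the JSON returned by the API includes `prompt_eval_count`, `prompt_eval_duration`, `eval_count` and `eval_duration`, with durations in nanoseconds. Here is a small helper that turns those into tokens per second. The sample numbers are made up for illustration and are not measurements from Duke.

```python
NS_PER_S = 1_000_000_000  # Ollama reports *_duration fields in nanoseconds


def tokens_per_second(stats: dict) -> dict[str, float]:
    """Compute prompt-processing and generation throughput from Ollama response stats."""
    rates = {}
    for label, count_key, dur_key in [
        ("prompt", "prompt_eval_count", "prompt_eval_duration"),
        ("generation", "eval_count", "eval_duration"),
    ]:
        count, duration = stats.get(count_key), stats.get(dur_key)
        if count and duration:  # skip stats that are missing or zero
            rates[label] = count / (duration / NS_PER_S)
    return rates


if __name__ == "__main__":
    sample = {  # illustrative numbers, not a real measurement
        "prompt_eval_count": 20,
        "prompt_eval_duration": 500_000_000,
        "eval_count": 100,
        "eval_duration": 4_000_000_000,
    }
    for label, rate in tokens_per_second(sample).items():
        print(f"{label}: {rate:.1f} tokens/s")
```

Generation tokens per second is usually the number that matters most for chat, and it is where offloading part of a model to system RAM shows up most clearly.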

Multimodal

Most LLMs focus on text-based input, but many models can also work with sound, images and even robotics. Now that my test bed is steady, I plan to explore this space more, running Whisper for local speech-to-text and SAM 2 for video analysis.

IDE Integrations

I’m currently using GitHub Copilot with VS Code. I also have the option of augmenting Cursor with my home server. That’s on my to-do list, but for now I’m happy with the current support and setup of my models.

Privacy

An added benefit of a local AI server is complete control over the data I send. This means I can happily chat with my tax returns, knowing I’m not sending all of my data to multiple third parties.

Cost of the Server

| Part | Cost |
|---|---|
| GPU: NVIDIA RTX 4000 Ada | $1,279* |
| CPU: AMD Ryzen 5 5600X | $133 |
| RAM: 128GB | $187 |
| Motherboard: ASUS Pro B550M-C | $99 |
| Case: SilverStone Technology 2U Dual Rack** | $169 |
| Disk: 1TB NVMe | $80 |
| Banana | $0.40 |
| Total | $1,947.40 |
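A quick check of the arithmetic, summing the listed part prices with `Decimal` to avoid floating-point rounding:

```python
from decimal import Decimal

# Part prices in USD, as listed in the cost table.
PARTS = {
    "GPU: NVIDIA RTX 4000 Ada": Decimal("1279.00"),
    "CPU: AMD Ryzen 5 5600X": Decimal("133.00"),
    "RAM: 128GB": Decimal("187.00"),
    "Motherboard: ASUS Pro B550M-C": Decimal("99.00"),
    "Case: SilverStone 2U": Decimal("169.00"),
    "Disk: 1TB NVMe": Decimal("80.00"),
    "Banana": Decimal("0.40"),
}

total = sum(PARTS.values(), Decimal("0"))
print(f"Total: ${total:,.2f}")  # Total: $1,947.40
```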

* ~ There are a lot of older GPUs (NVIDIA Tesla, etc.), but these tend to have lower clock speeds and less VRAM, and generally consume a lot more power. If I were building a workstation or part-time gaming rig, I would opt for a 4090. An M2 Max Pro at $1,999 would also be a tempting option, but it costs an eye-bleeding $4,799 to get the same amount of RAM.

** ~ This case is terrible, there are many other 2U cases that are better and cheaper.

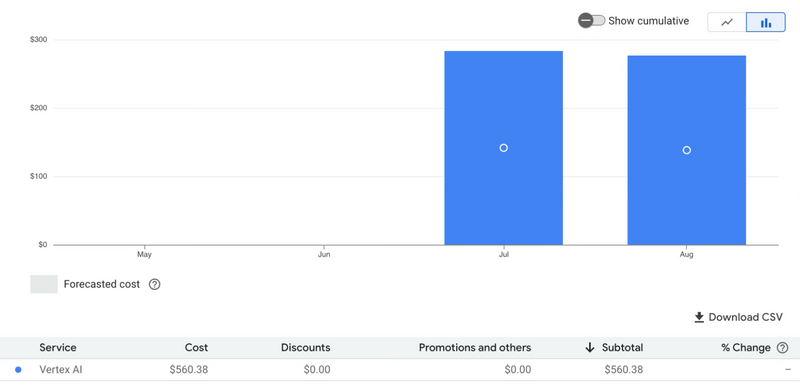

Considering I easily spent $560 on Google Vertex this summer, I feel OK about the predictable cost of this smaller but sustainable home rig.

Plans for Duke

Now that Duke is up and running, I’m planning to use it as a local test bed for new models. The current Docker + Ollama flow works smoothly, and being able to connect to it while on the go is perfect for my use case. I’m also interested in using it as a hacky backend for a few web apps, and plan to share my experience once those are up and running.

Product Generalist

Currently in Oakland, CA